This week we learned geoprocessing in ArcGIS Pro using ModelBuilder and Python.

First, I built a model in ModelBuilder. My goal was a model that:

- Clips all soils to the extent of the basin

- Selects all soils that are classified as "Not prime farmland" under the [FARMLNDCL] attribute

- Erases the "Not prime farmland" soil selection from the basin polygon

First, I used the Clip tool to cut the soils layer (input features) down to the basin area (clip features). [I think of the input features as the sheet of cookie dough and the clip features as the cookie cutter.]

Second, I used the clipped data from step one to select only the areas classified as "Not prime farmland," using the query Where FARMLNDCL is equal to 'Not prime farmland'. Here the attribute value 'Not prime farmland' comes from the clipped soil layer.

Lastly, I used the Erase tool to erase the selected data from step 2 (erase features) from the original soil shapefile (input features).
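
For comparison, the same three steps can be sketched as a Python script. This is a minimal sketch assuming ArcGIS Pro's arcpy is available; the helper names farmland_where_clause and run_soil_model and any dataset paths passed to them are hypothetical, and it erases from the basin polygon as stated in the model goal. Only the where-clause helper runs outside ArcGIS Pro.

```python
def farmland_where_clause(field="FARMLNDCL", value="Not prime farmland"):
    """Build the SQL where clause used by the Select step.

    Shapefile field names are wrapped in double quotes and string values in
    single quotes; a single quote inside the value is escaped by doubling it.
    """
    escaped = value.replace("'", "''")
    return f"\"{field}\" = '{escaped}'"


def run_soil_model(soils, basin, clipped, not_prime, result):
    """Clip -> Select -> Erase, mirroring the ModelBuilder model.

    All arguments are hypothetical dataset paths, e.g. "soils.shp".
    """
    import arcpy  # only importable inside ArcGIS Pro's Python environment

    arcpy.env.overwriteOutput = True
    # 1. Cut the soils (input features) to the basin (clip features).
    arcpy.analysis.Clip(soils, basin, clipped)
    # 2. Keep only the clipped soils classified as "Not prime farmland".
    arcpy.analysis.Select(clipped, not_prime, farmland_where_clause())
    # 3. Remove those soils from the basin polygon (assumption: basin is the input).
    arcpy.analysis.Erase(basin, not_prime, result)
    return result
```

Building the where clause in a small function keeps the quoting rules in one place, which is where Select queries most often go wrong.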

For the second part of the lab, I used Python. Our desired outcome was a script that:

- Adds XY coordinates to the hospitals shapefile

- Creates a 1000 m buffer around the hospitals

- Dissolves the hospital buffers into a single-feature layer

- Prints geoprocessing messages after each tool runs

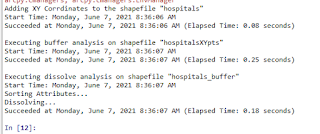

To add the XY coordinates, I based the script entirely on the Esri help page for the tool:

arcpy.management.AddXY(in_features)

I knew I had to allow existing outputs to be overwritten, so I used the 'arcpy.env.overwriteOutput = True' line from our practice exercise. To print the geoprocessing messages for each tool, I used the print(arcpy.GetMessages()) syntax I also learned in the exercise. I also wrote a custom print statement before each GetMessages() call stating which tool was running.
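
The announce, run, then print-messages pattern can be wrapped in one small function. This is a minimal sketch: run_and_report and add_xy are hypothetical helper names, the hospitals path is an assumption, and only run_and_report works outside ArcGIS Pro (it accepts any callable, so it can be tried with stand-ins).

```python
def run_and_report(label, tool, *args, get_messages=None):
    """Announce a tool, run it, then print its geoprocessing messages.

    `tool` is any callable (e.g. arcpy.management.AddXY) and `get_messages`
    is expected to behave like arcpy.GetMessages.
    """
    print(f"Running {label}...")
    result = tool(*args)
    if get_messages is not None:
        print(get_messages())
    return result


def add_xy(hospitals):
    """Add POINT_X/POINT_Y fields to a point dataset (hypothetical path)."""
    import arcpy  # only importable inside ArcGIS Pro's Python environment

    arcpy.env.overwriteOutput = True
    return run_and_report("Add XY", arcpy.management.AddXY, hospitals,
                          get_messages=arcpy.GetMessages)
```

Usage inside ArcGIS Pro would look like add_xy("hospitals.shp"), with the same wrapper reused for the Buffer and Dissolve calls.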

Again following the practice exercise, I used the following syntax for the buffer step:

arcpy.analysis.Buffer('input file', 'output file path', 'buffer distance')
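
Filled in for this lab, the buffer distance can be passed as a linear-unit string such as "1000 Meters". A minimal sketch, assuming arcpy; linear_unit and buffer_hospitals are hypothetical helper names and the paths are placeholders.

```python
def linear_unit(distance, unit="Meters"):
    """Format a distance as a linear-unit string such as "1000 Meters"."""
    return f"{distance} {unit}"


def buffer_hospitals(hospitals, out_buffers, distance=1000):
    """Buffer the hospital points by `distance` meters (hypothetical paths)."""
    import arcpy  # only importable inside ArcGIS Pro's Python environment

    arcpy.env.overwriteOutput = True
    print("Running Buffer...")
    arcpy.analysis.Buffer(hospitals, out_buffers, linear_unit(distance))
    print(arcpy.GetMessages())
    return out_buffers
```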

The last step, dissolving the buffered file, was the trickiest for me because I got a bit lost in the syntax.

arcpy.management.Dissolve(in_features, out_feature_class, {dissolve_field}, {statistics_fields}, {multi_part}, {unsplit_lines})

I used the above syntax, with BUFF_DIST as the dissolve field, the statistics field left blank (""), SINGLE_PART rather than MULTI_PART, and lastly DISSOLVE_LINES.
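
Writing the Dissolve parameters as keyword arguments makes it easier to see which optional slot each value fills. A minimal sketch, assuming arcpy; dissolve_params and dissolve_buffers are hypothetical helper names and the paths are placeholders. As I read the Dissolve documentation, SINGLE_PART writes separate features for buffer areas that do not touch, while MULTI_PART keeps them as one multipart feature per dissolve value, so multi_part is left adjustable.

```python
def dissolve_params(in_features, out_feature_class, multi_part="SINGLE_PART"):
    """Keyword arguments matching the Dissolve choices described above."""
    return {
        "in_features": in_features,
        "out_feature_class": out_feature_class,
        "dissolve_field": "BUFF_DIST",      # field written by the Buffer tool
        "statistics_fields": "",            # no summary statistics
        "multi_part": multi_part,           # "SINGLE_PART" or "MULTI_PART"
        "unsplit_lines": "DISSOLVE_LINES",
    }


def dissolve_buffers(in_buffers, out_dissolved, multi_part="SINGLE_PART"):
    """Dissolve the hospital buffers (hypothetical paths)."""
    import arcpy  # only importable inside ArcGIS Pro's Python environment

    arcpy.env.overwriteOutput = True
    print("Running Dissolve...")
    arcpy.management.Dissolve(**dissolve_params(in_buffers, out_dissolved, multi_part))
    print(arcpy.GetMessages())
    return out_dissolved
```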
